WASHINGTON -- Gallup, which has long touted itself as the most trusted survey brand in the world, is facing a crisis. If Barack Obama's reelection in November was widely considered a win for data crunchers, who had predicted the president's victory in the face of skeptical pundits, it was a black mark for Gallup, whose polls leading up to Election Day had given the edge to Republican nominee Mitt Romney.

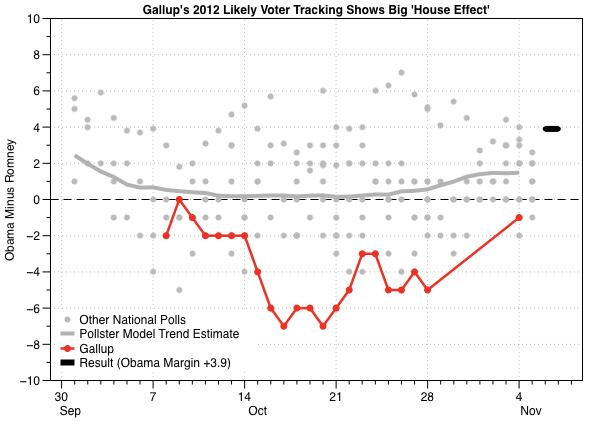

Obama prevailed in the national popular vote by a nearly 4 percentage point margin. Gallup's final pre-election poll, however, showed Romney leading Obama 49 to 48 percent. And the firm's tracking surveys conducted earlier in October found Romney ahead by bigger margins, results that were consistently the most favorable to Romney among the national polls.

Since the election, the Gallup Poll's editor-in-chief, Frank Newport, has at times downplayed the significance of his firm's shortcomings. At a panel in November, he characterized Gallup's final pre-election poll as "in the range of where it ended up" and "within a point or two" of the final forecasts of other polls. But in late January, he announced that the company was conducting a "comprehensive review" of its polling methods.

There is a lot at stake in this review, which is being assisted by University of Michigan political scientist and highly respected survey methodologist Michael Traugott. Polling is a competitive business, and Gallup's value as a brand is tied directly to the accuracy of its results.

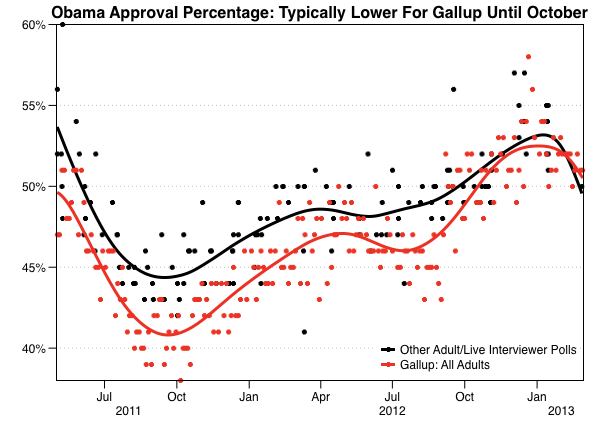

The firm's reputation had already taken a hit last summer when an investigation by The Huffington Post revealed that the way Gallup accounted for race led to an under-representation of non-whites in its samples and a consistent underestimation of Obama's job approval rating, prompting the firm to make changes in its methodology. (Since Gallup implemented those changes in October, the "house effect" in its measurement of Obama's job rating has significantly decreased.)

And in January, Gallup and USA Today ended their 20-year polling partnership. While both parties described the breakup as amicable, the pollster's misfire on the 2012 election loomed large in the background.

Over the years, Gallup's business has grown and evolved into much more than public opinion polling. The company currently describes itself primarily as a "performance management consulting firm," and the Gallup Poll is just one of its four divisions. Yet Gallup's reputation as the nation's premier public opinion pollster remains central to its business, helping it win millions of dollars in contracts with the federal government, for which the firm conducts research and collects data.

That portion of Gallup's business is coming under a different sort of pressure. In November, the Justice Department joined a whistleblower lawsuit filed by a former employee accusing the firm of overcharging taxpayers by at least $13 million in its federal contracts.

Although the election results were hailed as a victory for pollsters generally, Gallup's shortcomings have led some to question whether the methods of all national polling firms are outdated.

From the Obama campaign, which supplemented traditional polling methods with advanced data analytics drawn from public voting records, the criticism was more pointed. "We spent a whole bunch of time figuring out that American polling is broken," Obama campaign manager Jim Messina told a post-election forum. The reelection team's internal numbers told a different story about trends in the fall and accurately forecast the outcome, leading Messina to argue that "most of the public polls you were seeing were completely ridiculous."

An assessment of Gallup's recent struggles shows that its problems measuring the electoral horse race in 2012 were more severe than, but similar in nature to, those faced by many other media polls. The firm's internal review, therefore, offers Gallup a chance not only to identify what went awry in 2012, but also to help the public understand how polling works -- and sometimes doesn't -- in the current era. In particular, the review could help shed light on two major problem areas for polling firms today: how they treat their "likely voter" models and how they draw their samples from the general population.

Newport told HuffPost that although the "major purpose" of Gallup's review is to "focus on our practices and procedures," it may also "shed some light on factors operative in this election which may have affected pre-election polls more generally."

Gallup has a chance both to reassert its position at the top of the field and to restore faith in all similar national polls -- if it confronts this review with transparency and seriousness of purpose.

CHOOSING 'LIKELY VOTERS'

Since the 2012 election, much of the speculation about Gallup's miss has centered on the likely voter model the firm uses to decide which survey respondents count toward its results, a method it has applied with only minor modifications since the 1950s.

The basic idea is straightforward: Gallup uses answers to survey questions to identify the adult respondents who seem most likely to vote. In practice, that means asking a series of questions about voter registration, intent to vote, past voting, interest in the campaign and knowledge of voting procedures -- all characteristics that typically correlate with a greater likelihood of actually casting a ballot -- and combining responses to those questions into a seven-point scale. Those respondents who score highest on the scale are classified as "likely voters," after Gallup makes a judgment call about its cutoff point -- that is, the percentage of adults that best matches the probable level of voter turnout.
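To make the mechanics concrete, here is a minimal Python sketch of a scale-and-cutoff model. It is an illustration only, not Gallup's actual procedure: the `Respondent` type, the one-point-per-indicator scoring and the tie-breaking rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Respondent:
    choice: str             # candidate preference, e.g. "Obama" or "Romney"
    indicators: List[bool]  # seven yes/no turnout indicators (hypothetical coding)


def turnout_score(r: Respondent) -> int:
    # Assumption: one point per indicator, so scores run from 0 to 7.
    return sum(r.indicators)


def select_likely_voters(sample: List[Respondent], cutoff_share: float) -> List[Respondent]:
    # Rank respondents by score, highest first, and keep the top cutoff_share.
    # Ties at the boundary fall back to original order here; a real model
    # would need a more careful rule.
    ranked = sorted(sample, key=turnout_score, reverse=True)
    keep = round(len(ranked) * cutoff_share)
    return ranked[:keep]


def margin(sample: List[Respondent], a: str, b: str) -> float:
    # Percentage-point lead of candidate a over candidate b in the sample.
    lead = sum(r.choice == a for r in sample) - sum(r.choice == b for r in sample)
    return 100.0 * lead / len(sample)
```

Lowering `cutoff_share` narrows the pool to the highest scorers. If those scorers lean toward one candidate, the reported margin moves with the cutoff, which is why the judgment call matters.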

Until the fall of an election year, most national pollsters choose to report their survey results for the larger population of self-described registered voters. But in the final weeks of the campaign, Gallup and others shift to the narrower segment of likely voters, which has typically made their estimates more accurate by filtering out registered voters who aren't likely to go to the polls on Election Day.

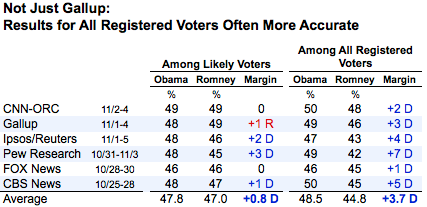

What went wrong in 2012? One possibility is that Gallup set its cutoff too low, classifying too small a share of respondents as likely voters. While Gallup's final poll gave Romney a 1 point edge among likely voters, the results from the same poll for all registered voters gave Obama a 3 point lead (49 to 46 percent), very close to the president's actual margin of victory of 3.9 points.

Gallup was not alone on this score. Of five other national pollsters that reported results for both likely and registered voters on their final surveys, only the Pew Research Center made its results more accurate by narrowing from registered to likely voters. The average of all six pollsters had the final Obama lead almost exactly right among registered voters (3.7 percentage points), but too close (0.8 percentage points) among likely voters.

One theory as to why the pool of self-described registered voters so closely resembled the actual electorate is that many non-likely voters were, in effect, already screening themselves out -- by opting out of the survey. As the Pew Research Center reported in May 2012, actual voters are already more likely to respond to its surveys, while non-voters are more likely to hang up. "This pattern," Pew wrote, "has led pollsters to adopt methods to correct for the possible over-representation of voters in their samples."

In the case of Pew Research, one such correction is setting the cutoff used to determine likely voters at a slightly higher level than the turnout Pew actually expects. In 2012, for example, the pollster expected a 58 percent turnout among registered voters, but set the cutoff level at 63 percent of registered-voter respondents to compensate for the presumed non-response bias.

While Gallup has detailed the workings of its likely voter model, the firm has not yet published information about either the cutoff percentage it used or the response rates achieved by its surveys in 2012. A complete review, made public, could shed light on this issue.

Another source of criticism of Gallup's likely voter model is its reliance on self-reported interest in the election. In a review conducted after the 2012 election, Republican pollster Bill McInturff shared data from a survey his company conducted in California in 2010. It found that while those who rated their interest in the election the highest were the most likely to vote, roughly 40 percent of those with lower reported interest -- those who rated their interest as four or lower on a 10-point scale -- still voted in the 2010 elections.

Perhaps more to the point: McInturff reported that among respondents who actually voted from his 2010 surveys in California, older and white voters expressed much greater interest in the election than younger and non-white voters.

"It is clear, a traditional Likely Voter Model based only on self-described interest and self-described likelihood to vote missed the scope of the turnout of 18-29 year olds and Latinos in 2012," McInturff wrote.

Interest in the campaign is just one of seven turnout indicators that Gallup uses in its model, and pollsters have long understood that although their likely voter models typically make their results more accurate, they often misclassify whether individual voters will or will not vote. But a more complete investigation based on Gallup's extensive data would provide more clues about why its likely voter model had Romney ahead, as well as why other pollsters understated Obama's margin of victory to a lesser degree.

LANDLINES AND CELL PHONES

There is one important methodological difference between Gallup and other pollsters that nearly everyone missed in 2012 and that may explain -- at least in part -- why Gallup's numbers went wrong. It involves a significant change in the way Gallup draws its samples, first implemented in April 2011, that no other national polling firm has yet adopted.

The change is part of a larger story about the immense challenges now facing sampling procedures that have been standard for decades. Since news organizations first started conducting polls via telephone in the late 1960s and early 1970s, their samples have typically been drawn using a method known as "random digit dialing" (RDD).

The idea is to start with a random sample of telephone number prefixes or "exchanges" (the 555 in 202-555-1212) and then, for each selected prefix, randomly generate the last four digits to form a complete number. (The process is more complex in actual practice, but that's the basic gist.)
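The basic gist can be sketched in a few lines of Python. This is a simplified illustration, not any vendor's actual procedure: the function name `rdd_sample` and the representation of exchanges as (area code, prefix) pairs are assumptions, and the sketch does none of the screening for non-working numbers that real samples require.

```python
import random
from typing import List, Optional, Tuple


def rdd_sample(exchanges: List[Tuple[str, str]], n: int,
               seed: Optional[int] = None) -> List[str]:
    # exchanges: hypothetical list of (area_code, prefix) pairs, e.g. ("202", "555").
    rng = random.Random(seed)
    numbers = []
    for _ in range(n):
        # Step 1: pick an exchange at random.
        area, prefix = rng.choice(exchanges)
        # Step 2: generate the last four digits at random (0000-9999).
        suffix = rng.randrange(10_000)
        numbers.append(f"{area}-{prefix}-{suffix:04d}")
    return numbers
```

Because the last four digits are generated rather than looked up, listed and unlisted numbers are equally likely to be drawn. So are numbers that are not in service.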

The rationale for RDD is that it creates random samples of all working phone numbers, both listed and unlisted. By contrast, samples drawn from published directories (i.e., the white pages) miss a significant chunk of households with unlisted numbers. As of 2011, 45 percent of U.S. households were not included in published phone directories, according to the sampling vendor Survey Sampling International.

The RDD sampling procedure works similarly for mobile phones, since most mobile numbers are assigned to exchanges reserved for that purpose. So most national media polls now combine two RDD samples, one of landline phones and a second of cell phones.

The rapidly changing patterns in phone use in recent decades have also increased pollster costs. RDD sampling has always been inefficient, because some portion of the randomly generated numbers is inevitably non-working, and the process of accurately sorting out the live numbers is time-consuming and expensive. Over the years, however, the greater cost of polling by cell phone and a steady decline in the efficiency of the sampling process have combined to make traditional RDD methods significantly more expensive.

Along the way, pollsters have nibbled around the edges of their RDD methods in search of acceptable tweaks that might hold down costs. Prominent national media pollsters have typically been cautious about more radical changes. Most, for example, eschew the use of so-called predictive dialing -- the annoying technology that only connects a live interviewer once the respondent picks up the phone and says "hello" -- because of concerns that potential respondents will just hang up. (You've likely experienced such annoyance yourself if you've ever answered your phone and then waited for a telemarketer or automated voice to come on the line.)

In recent years, however, a team of survey researchers at the University of Virginia (UVA) noticed a potentially cost-cutting silver lining in the massive growth of cell phone usage: Most of the Americans with unlisted landline numbers now have mobile phone service. So it may be possible, at least in theory, to reach virtually all adults with a combination of RDD samples of mobile phones and of listed landline phones.

Moving from randomly generated numbers to listed numbers would save pollsters time and money, since most calls to landlines would reach live numbers and the callers would spend far less time dialing non-working numbers that ring endlessly without answer.

As of 2006, the UVA researchers found that this combination could theoretically reach 86 percent of U.S. adults, but the rapid growth of cell phone usage has increased that number significantly. Two years later, they conducted field tests showing this combined sampling method could theoretically reach all but 1 to 2 percent of adults in three counties in Virginia.

The study caught the attention of the methodologists at Gallup, whose investment in standard RDD interviewing is substantial. Since early 2008, Gallup has partnered with the "global well-being company" Healthways to conduct the Gallup Daily, a tracking survey of 3,500 adults that encompasses both political questions like presidential job approval and the Gallup-Healthways Well-Being Index. The survey launched using the increasingly expensive combination of RDD calls to mobile and listed and unlisted landline phones. Healthways has committed to fund the project for 25 years.

In April 2011, however, Gallup began drawing the landline portion of its samples for the Gallup Daily and other surveys from phone numbers listed in electronic directories. At the time, the only indication of a change was a two-sentence description that began appearing in the methodology blurb at the bottom of articles on Gallup.com: "Landline telephone numbers are chosen at random among listed telephone numbers. Cell phones numbers are selected using random digit dial methods."

Newport, the Gallup editor-in-chief, told HuffPost that the switch was made after internal "analysis and pre-test research" confirmed the findings of the UVA study. "There were very few landline unlisteds who were landline only, 2-3 percent," he wrote via email, "and likely to decline in the future."

To make this new sampling method work, Gallup began "weighting" up a small percentage of respondents (those interviewed by cell phone who say they also have an unlisted landline) to compensate for the missing 2 to 3 percent of adults who are totally out of reach: those with an unlisted landline and no cell phone. To accommodate this additional weighting, Gallup boosted the number of cell phone interviews from 20 to 40 percent of completed calls.

On its face, that compromise seems reasonable. But it requires Gallup to weight its data more heavily than other national pollsters.

That heavier weighting likely exacerbated a problem HuffPost identified in its June 2012 investigation of Gallup, which showed that the "trimming" of especially large weights explained why the firm consistently failed to match its own targets for race and Hispanic origin. The effort to reduce weighting is also partly why Gallup chose to increase the percentage of calls placed to cell phones again, in October 2012, to 50 percent -- a larger percentage than used by most other media pollsters last year. As Newport said at the time, the change would allow for smaller weights and thus "provide a more consistent match with weight targets."
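A toy example shows why trimming large weights can leave a sample short of its targets. The sketch below uses simple one-variable weighting with made-up numbers. The group shares, the cap of 1.5 and the function names are all hypothetical, and Gallup's actual weighting scheme is more elaborate.

```python
from typing import Dict


def poststratify_weights(counts: Dict[str, int], targets: Dict[str, float]) -> Dict[str, float]:
    # Weight for each group = target population share / share in the raw sample.
    n = sum(counts.values())
    return {g: targets[g] / (counts[g] / n) for g in counts}


def trim_weights(weights: Dict[str, float], cap: float) -> Dict[str, float]:
    # "Trimming": no respondent may count for more than `cap`.
    return {g: min(w, cap) for g, w in weights.items()}


def weighted_shares(counts: Dict[str, int], weights: Dict[str, float]) -> Dict[str, float]:
    # Share of the weighted sample that each group ends up representing.
    totals = {g: counts[g] * weights[g] for g in counts}
    total = sum(totals.values())
    return {g: t / total for g, t in totals.items()}


# Hypothetical sample: non-whites are 15% of interviews but 30% of the target.
counts = {"white": 850, "nonwhite": 150}
targets = {"white": 0.70, "nonwhite": 0.30}

full = poststratify_weights(counts, targets)  # nonwhite weight is 2.0
trimmed = trim_weights(full, cap=1.5)         # nonwhite weight capped at 1.5
```

In this made-up case the untrimmed weights hit the 30 percent target exactly, while the trimmed weights leave non-whites at about 24 percent of the weighted sample. Completing more interviews with the under-represented group, as Gallup did by raising its cell phone share, shrinks the weights needed and so reduces how often the cap bites.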

MISSING THE UNLISTED

Does this aspect of Gallup's methodology explain why it showed a pronounced house effect late in the presidential race? "Our preliminary research on the election tracking," Newport said, "suggests that this did not have a significant impact on our election estimates."

Gallup has not publicly released any of the raw data it collected for pre-election surveys in October or November 2012. To try to check Newport's assertion, HuffPost reviewed survey data collected by the Democratic-sponsored polling organization Democracy Corps as part of a pre-election report on the importance of cell phone interviewing.

Like many other media pollsters, Democracy Corps called RDD samples of both cell phones and landlines, but its sample vendor indicated which of the selected numbers were also listed in published directories. This extra bit of information can help give us a sense of the degree to which missing unlisted-landline-only households might have affected Gallup's samples.

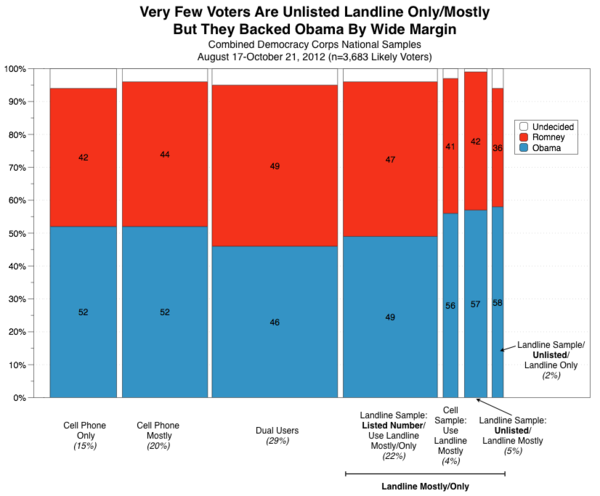

The chart above illustrates the most important tabulation from the Democracy Corps data. On the one hand, the households that Gallup misses altogether -- those with an unlisted landline and no cell phone -- supported Obama over Romney by a lopsided 22 point margin (58 to 36 percent). On the other hand, this subgroup is tiny, just 2 percent of all likely voters, and would have little effect on the overall vote estimate even if it were missed completely.
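The arithmetic behind "little effect" is easy to check. The sketch below asks what the margin would look like if the 2 percent subgroup were dropped entirely with no compensating weights. It assumes, purely for illustration, that the full likely-voter margin equals Obama's actual 3.9 point national margin. The function name is hypothetical.

```python
def margin_without_subgroup(full_margin: float, subgroup_share: float,
                            subgroup_margin: float) -> float:
    # The full margin is a weighted average of the subgroup and everyone else:
    #   full = share * subgroup + (1 - share) * rest
    # Solving for `rest` gives the margin a poll would see if it missed the subgroup.
    return (full_margin - subgroup_share * subgroup_margin) / (1 - subgroup_share)


full_margin = 3.9        # assumption: the actual Obama margin, in points
subgroup_share = 0.02    # unlisted landline, no cell phone (Democracy Corps)
subgroup_margin = 22.0   # 58 percent minus 36 percent

rest_margin = margin_without_subgroup(full_margin, subgroup_share, subgroup_margin)
shift = full_margin - rest_margin  # how much the Obama margin would be understated
```

Under these assumptions, missing the subgroup completely understates Obama's margin by roughly 0.4 points. That is too small to explain Gallup's miss on its own, though it pushes in the same direction as the miss.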

Although the Democracy Corps data generally back up Newport's assertion that listed directory sampling did not significantly impact Gallup's election numbers, he nonetheless confirmed that it is "one of the elements we are reviewing and one of several areas where we will be conducting additional experimental research."

And for good reason. Anything as unusual as Gallup's methodological change deserves a closer look, because any sample design that leaves people out should be scrupulously examined.

"I'm glad that Gallup wants to help explain what happened and they're taking a rigorous approach to looking at their methods which are different than they had been," said Andrew Kohut, founding director of the Pew Research Center. "There's every reason to see if the changes in those methods have accounted for how their poll did."

But like other pollsters HuffPost interviewed for this story, Kohut questioned Gallup's assumptions and the added complexity of the weighting scheme required to compensate for the potentially missing respondents. "It's hard enough to take into account the right ratio between the people who are both from the cell and landline. Now you're adding another dimension, [which is] very complicating," he said.

At issue is not just the 2 to 3 percent whose only phone is an unlisted landline, but also the larger number with an unlisted landline and a cell phone who rarely or never answer calls from strangers on their cell phones. The Democracy Corps data indicate that those voters who said they used their unlisted landline for most calls were as heavily pro-Obama as those who had only an unlisted landline. Did Gallup's procedure account for bias against those dual users who are much easier to reach via landline?

Also, Gallup relies on its respondents to self-report their use of an unlisted landline. Some might not know whether their number is listed in a telephone directory. To what extent did Gallup test the accuracy of those self-reports?

Finally, even if the impact of the listed directory sampling is minor, it may have worked in concert with other small errors in Romney's favor to create Gallup's 2012 problems. All surveys are subject to small, random design errors that usually cancel each other out. It's when a series of small errors affect the data in the same direction that minor house effects can turn into significant errors.

TRANSPARENCY RENEWED?

Newport initially portrayed Gallup's post-election review as a routine examination, but his more recent announcement of Traugott's involvement and the comprehensive nature of the review suggests something less ordinary.

Traugott led an evaluation of polling misfires during the 2008 presidential primaries, which was undertaken by the American Association for Public Opinion Research and marked by its commitment to transparency. AAPOR asked the public pollsters involved to answer extensive questions about their methodologies and published their responses. Gallup was one of a handful of organizations that went the extra mile and provided Traugott's committee with the raw data gathered from individual respondents, along with permission to deposit those data in a publicly accessible archive.

That openness was consistent with Gallup's history. In 1967, founder George Gallup first proposed the "national standards group for polling" that became the National Council on Public Polls. Among other things, George Gallup wanted pollsters to commit to sharing "technical details that would help explain why polling results of one organization do not agree with those of another, when they differ." He also played a leading role in establishing the Roper Center Public Opinion Archives, where Gallup and other public pollsters have long deposited their raw data to be used in scholarly research and to provide a "public audit of polling data."

Kohut -- who began his career at Gallup and once served as its president -- underscored the continuing importance of transparency. Ordinary Americans may not "understand the ins and outs of [survey] methods," he said, but they need reassurance that differences between polls "are accounted for by methodological factors rather than based upon the political judgments of the people who run these polls."

Will Gallup's report on 2012 include a public release of the raw data from the final month of its presidential tracking poll? "We may certainly consider that," Newport said via email, noting that "we at Gallup will be writing up our conclusions and sharing them with interested parties."

Given the scrutiny that has fallen upon pollsters for last year's presidential predictions, let's hope the "interested parties" include all of us.

Source: http://www.huffingtonpost.com/2013/03/08/gallup-presidential-poll_n_2806361.html
